How to Ensure App Quality in a Startup: Fast Iteration and Automation Strategies

As a startup, your time to market is crucial. You need to create a product that can compete with established players, but you also need to do it quickly. While it's important to think about building a better and scalable product for millions of users, that effort is wasted if you have no users. A startup's time should be spent primarily on things that bring in new customers.

The problem with fast development is that quality can suffer if it's not a priority. So, how can you ensure app quality in a startup? In this article, I will discuss fast iteration and automation strategies that can help you create a quality product.

The balance between speed and quality

In a startup, speed and quality are two sides of the same coin. Moving fast is essential to stay competitive, but you must also ensure your product is of high quality. Focusing only on speed can result in a product full of bugs and usability issues. Conversely, focusing solely on quality may cause you to miss opportunities to get your product to market before competitors.

In my experience, it's better to lean towards speed and fix problems promptly as they surface. It's impossible to test everything and ensure 100% app quality. Instead, aim to catch the most common bugs, including those that only show up in production. However, doing so requires careful planning and strategy.

What to test and what not?

Testing is an essential part of ensuring app quality. However, not everything needs to be tested, so it's important to prioritise what needs to be tested and what doesn't.

What to test -

App UI, including forms, sign-ups, button actions, etc. - Conversion-critical steps where a poor experience can cause users to drop off.

End-to-end testing - Test the whole UX flow of the app in different scenarios.

Responsiveness and accessibility across devices - Things like scrolling and button touches.

Load times and performance

What can be skipped -

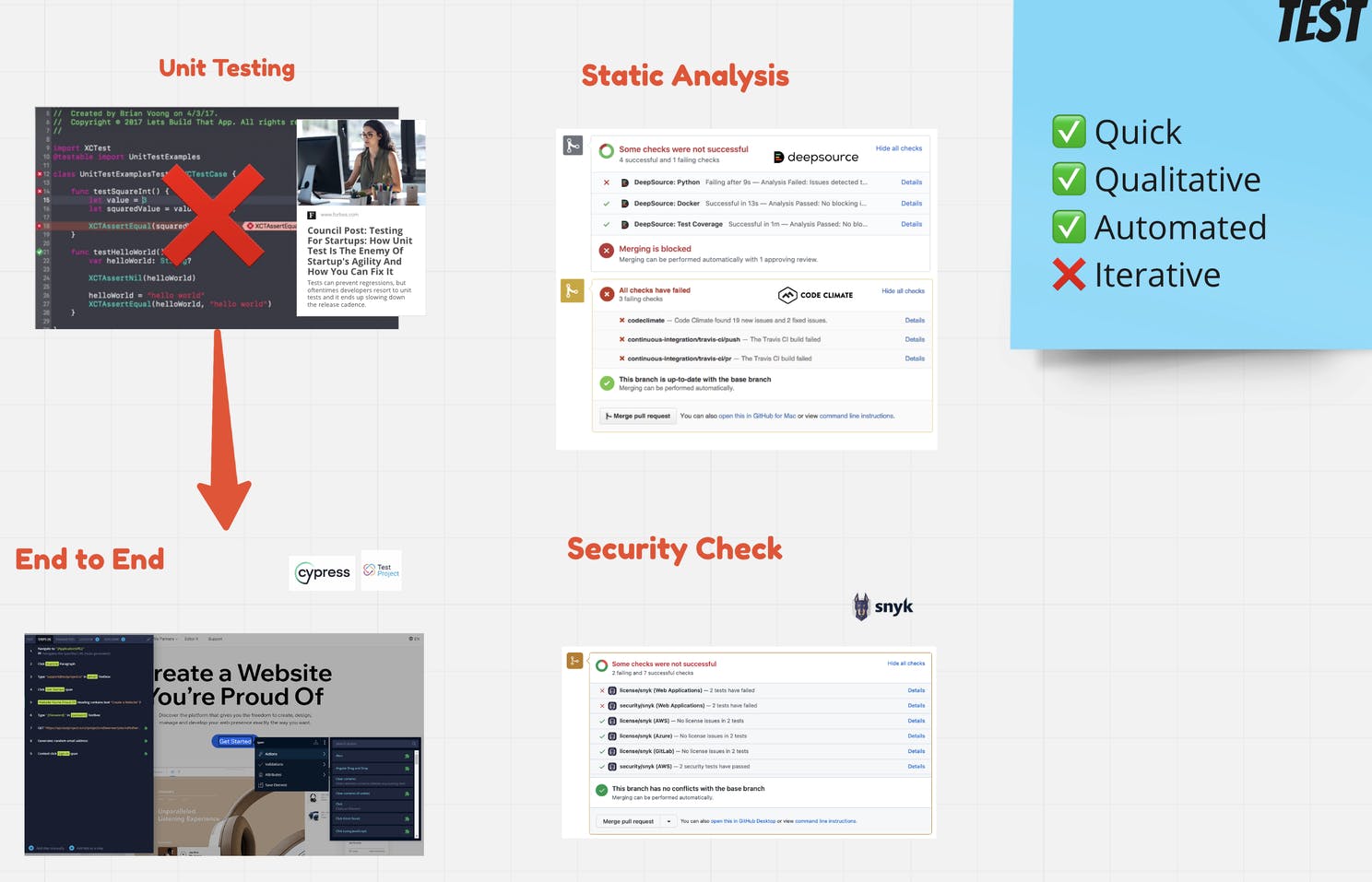

Unit testing - Although it is almost a development norm, it has been found to help the app only to a certain extent compared with the effort put into writing these tests, effort that could be used elsewhere.

Integration tests with each system - Startup tech should not be complex anyway. Even if you are going with a microservices design, integration should be handled coherently during the development cycles.

Component testing - Covered by the UI testing anyway.

Regression testing - Not needed, as there are not many scenarios that can lead to unforeseen side effects. Cover the different scenarios in your end-to-end tests instead.

Sanity testing - Even I don't understand how it helps.

System testing - In the case of mobile/web, cross-platform and responsive testing replaces it.

How to test?

The right testing strategy for a startup is a hybrid of manual and automated testing, with the following steps -

1. Run an automated static analysis tool that is triggered with every feature/code merge.

2. Set up automated end-to-end testing tools on the staging preview.

3. Roll out the preview to the internal team first and fix things promptly. Take the help of automated live preview tools.

4. Once you have caught most of the bugs in the previous steps, release the product to some beta users using feature flagging. Use issue tracking to catch bugs and fix them fast.

5. When you have caught enough bugs in step 4, go ahead and do a full rollout. Keep an active watch for bugs in production using error trackers and fix them fast.

Doing this iteratively will not only reduce your testing overhead but also prioritise the nastiest user-facing bugs first. Let's take a closer look at each step, along with some of the tools that can be used for it -

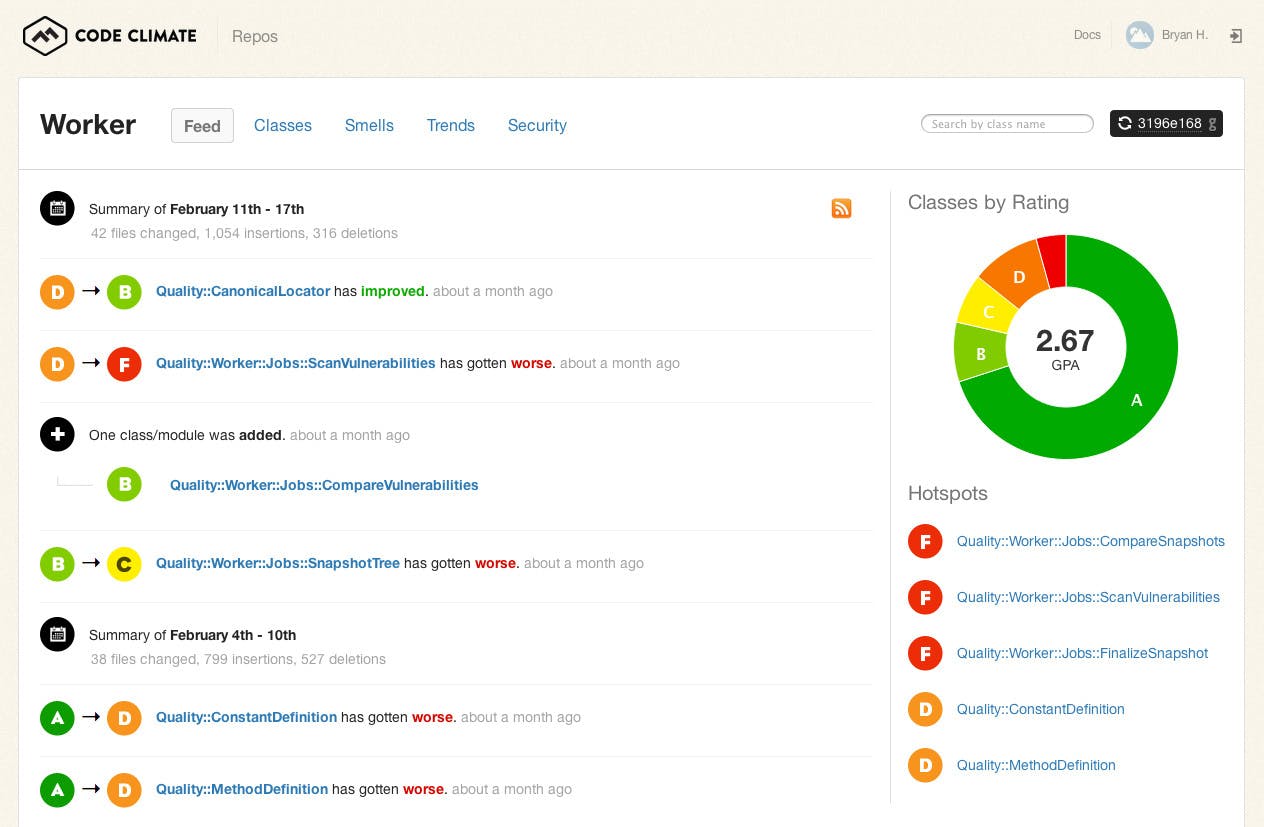

1. Static Analysis during code merge

Static analysis, also called static code analysis, is a method of debugging a computer program by examining the code without executing it. The process provides an understanding of the code structure and can help ensure that the code adheres to industry standards. It is a good fit here because it is completely automated and identifies common mistakes that result in bugs.

CodeClimate Dashboard showing total code quality

CodeClimate automatically scans every pull request to identify potential issues.
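As a small illustration of the kind of mistake static analysis catches without running anything, the sketch below uses TypeScript's own type checker as a stand-in for a dedicated tool such as CodeClimate. That substitution is mine, and the `User` type and `normalisedEmail` function are hypothetical names made up for the example. With `strictNullChecks` enabled, the commented-out line would be rejected at compile time because `email` may be undefined.

```typescript
// Hypothetical shape for illustration: `email` is optional.
interface User {
  name: string;
  email?: string;
}

// A checker running on every merge would flag this version, because
// `user.email` is possibly undefined (TypeScript with strictNullChecks):
//
//   return user.email.toLowerCase();
//
// The guarded version below passes the check and handles the missing
// value explicitly instead of throwing at runtime.
function normalisedEmail(user: User): string | null {
  if (user.email === undefined) {
    return null;
  }
  return user.email.trim().toLowerCase();
}
```

The point is less the specific rule than the timing: the mistake is reported when the pull request is opened, before anyone has to reproduce it in a browser.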

2. Automated End to End Test

End-to-end testing, also known as E2E testing, is a way to make sure that applications behave as expected and that the flow of data is maintained for all kinds of user tasks and processes. This type of testing approach starts from the end user's perspective and simulates a real-world scenario.

You can replicate user steps one by one and have them performed automatically with tools such as Cypress.io and TestProject. I especially love TestProject for its ability to create tests entirely with click actions.

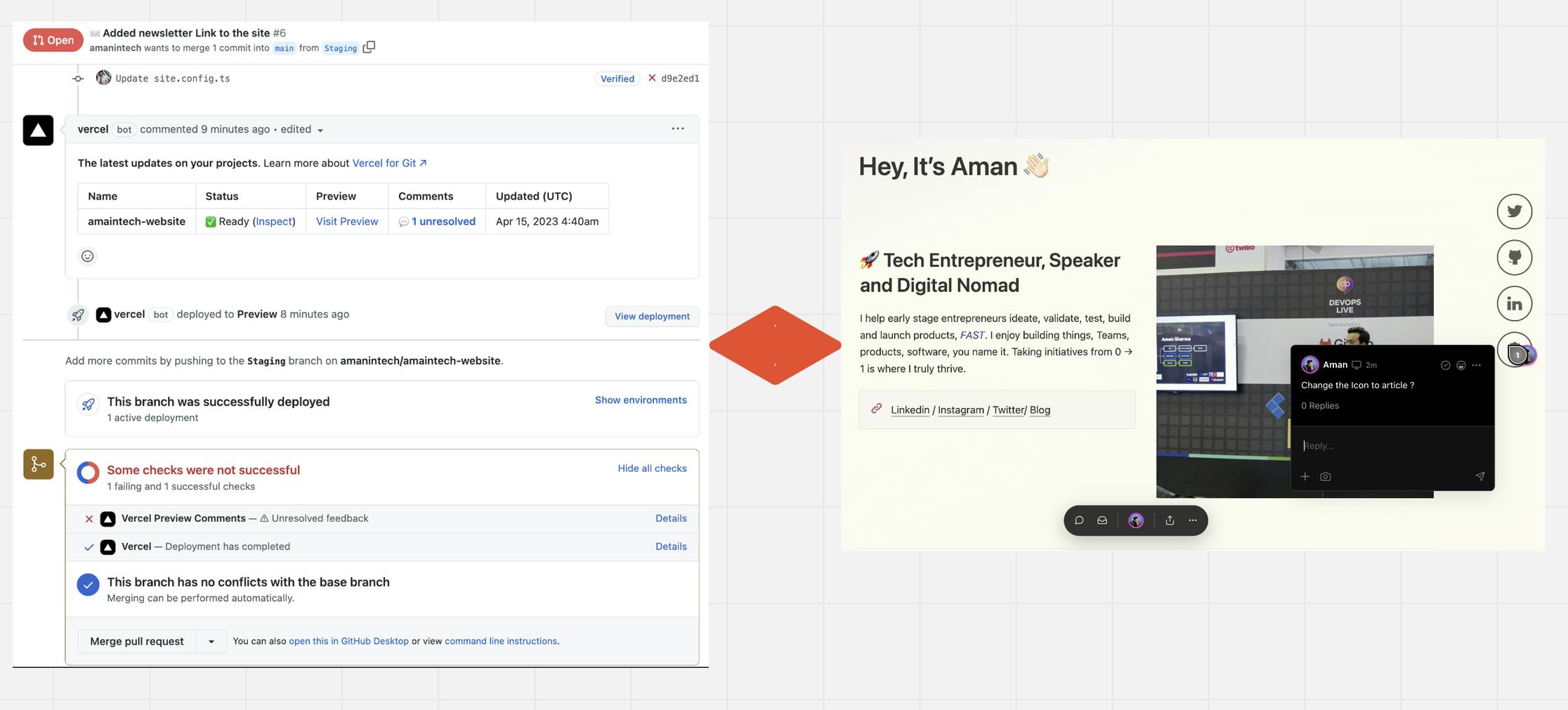

3. Internal Preview

Once feature development is complete, you can create a preview link that's only accessible to the internal team. I have used Vercel preview links in the past, and they are really amazing. Vercel automatically creates a preview link every time a new pull request is created. This link can then be shared with other members of the startup, who can add their feedback directly on the web app itself, just like in a shared document. The merge is not allowed until all the feedback is resolved. Not only does this save time, since you don't have to deploy the frontend every time a change is made, but it also helps in collecting feedback easily and getting it directly to the developers.

Vercel preview generates links for live feedback

4. Beta Rollout with Error tracking

By this time you should have already caught 80% of the bugs. In this next step we roll out the specific release to a limited number of users (~10%, based on certain criteria). There are two ways to do this: the staging link can be set up at a website proxy like Cloudflare that redirects beta users to the staging links, or you can use A/B testing or feature flagging. Then use an error tracking tool such as Sentry (sentry.io) and fix the issues faced by the largest number of users. This way you fix the user-facing issues first. Continue fixing these on staging until no new bugs come in.

Sentry issue tracking dashboard

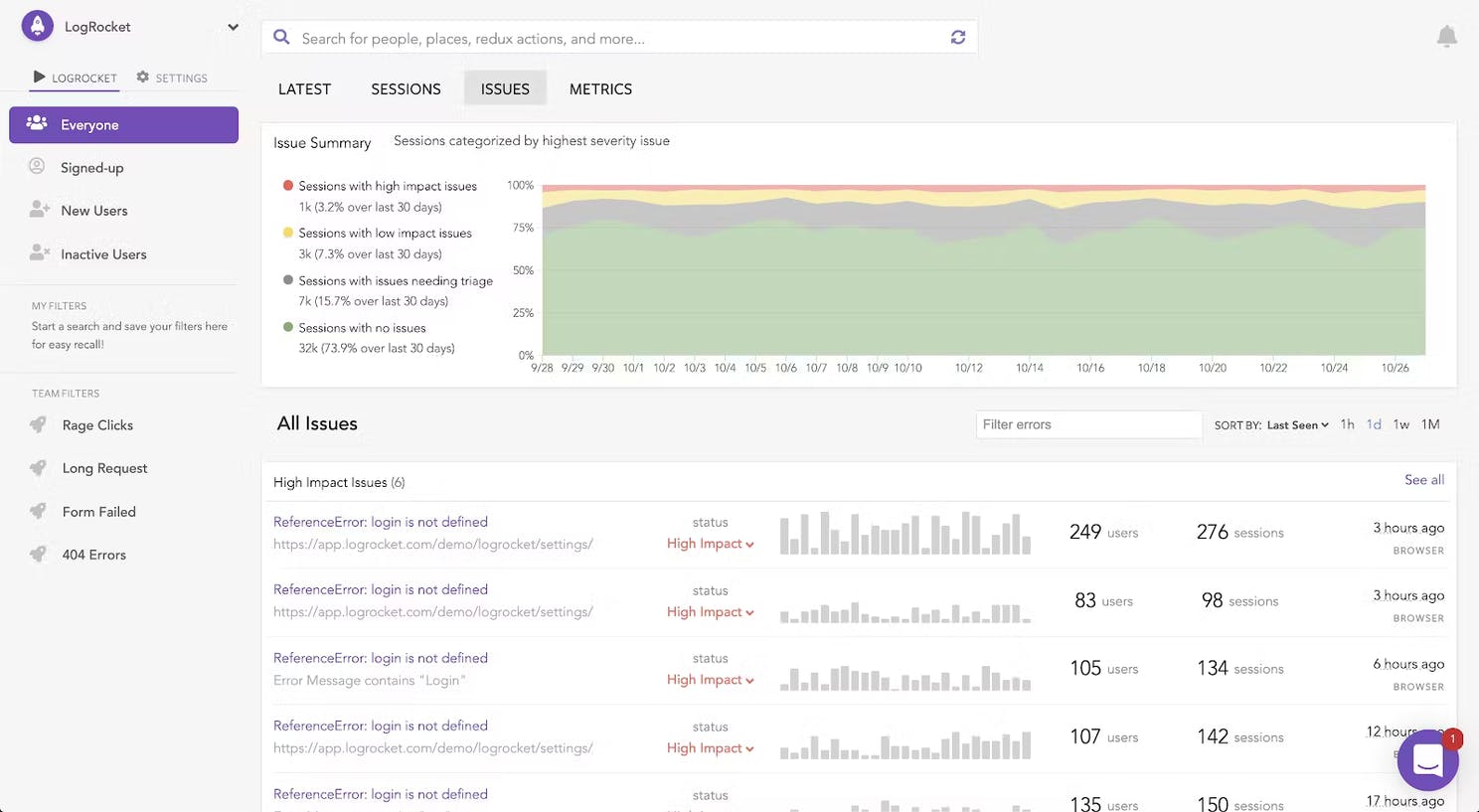
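To make the ~10% beta rollout from this step concrete, here is a minimal sketch of percentage-based feature flagging. It assumes each user has a stable string ID; the function names (`fnv1a`, `bucketFor`, `isInBeta`) are hypothetical and not taken from any particular feature-flag product. The idea is to hash the flag name and user ID into a bucket from 0 to 99, so the same user always gets the same answer.

```typescript
// FNV-1a style 32-bit hash over UTF-16 code units: small, deterministic,
// and dependency-free. Any stable hash would do for this purpose.
function fnv1a(input: string): number {
  let hash = 0x811c9dc5;
  for (let i = 0; i < input.length; i++) {
    hash ^= input.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193);
  }
  return hash >>> 0; // force an unsigned 32-bit result
}

// Map a user to a stable bucket in [0, 100) for a given flag.
// Including the flag name keeps different flags from picking the same users.
function bucketFor(flagName: string, userId: string): number {
  return fnv1a(`${flagName}:${userId}`) % 100;
}

// A user is in the beta when their bucket falls below the rollout percentage.
function isInBeta(flagName: string, userId: string, rolloutPercent: number): boolean {
  return bucketFor(flagName, userId) < rolloutPercent;
}
```

Because a user's bucket never changes, raising `rolloutPercent` from 10 towards 100 only adds users to the beta; nobody who already has the feature loses it on the way to the full rollout.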

5. Full rollout with performance and log monitoring

The app is already as good as it can get, but unforeseen issues can still happen in real-life scenarios, such as a huge surge of users or a long-running request blocking other requests. For this, set up a performance monitoring tool such as New Relic, which monitors application load and also shows requests that are taking too long and blocking other processes. In combination with that, use a log monitoring tool to really know what's going wrong in production. I have used LogRocket in the past; it systematically organises all trails and also monitors application performance on various devices.

New Relic Dashboard

LogRocket Dashboard
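The "requests that are taking too long" check from this step can be sketched in a few lines. This is only an illustration of the idea, not how New Relic or LogRocket compute anything; the `RequestSample` shape, the function names, and the latency budget are all assumptions of mine. It groups request timings by route and reports the routes whose 95th-percentile latency exceeds a budget.

```typescript
// Hypothetical record of one handled request.
interface RequestSample {
  route: string;      // e.g. "GET /api/orders"
  durationMs: number; // time spent handling the request
}

// Nearest-rank percentile of a non-empty list of numbers, for p in (0, 100].
function percentile(values: number[], p: number): number {
  if (values.length === 0) throw new Error("no samples");
  const sorted = [...values].sort((a, b) => a - b);
  const rank = Math.ceil((p * sorted.length) / 100);
  return sorted[Math.max(rank, 1) - 1];
}

// Return the routes whose p95 latency exceeds the budget, slowest first.
function slowRoutes(
  samples: RequestSample[],
  budgetMs: number
): Array<{ route: string; p95Ms: number }> {
  // Group durations by route.
  const byRoute = new Map<string, number[]>();
  for (const s of samples) {
    const durations = byRoute.get(s.route) ?? [];
    durations.push(s.durationMs);
    byRoute.set(s.route, durations);
  }
  // Keep only the routes that are over budget.
  const result: Array<{ route: string; p95Ms: number }> = [];
  byRoute.forEach((durations, route) => {
    const p95Ms = percentile(durations, 95);
    if (p95Ms > budgetMs) result.push({ route, p95Ms });
  });
  return result.sort((a, b) => b.p95Ms - a.p95Ms);
}
```

A percentile is used rather than an average because a handful of very slow requests, the ones that block everything else, barely move the mean but show up clearly at p95.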

Summary

Ensuring app quality in a startup is possible, but it requires the right strategies. By prioritising what needs to be tested, using automated testing tools, and ensuring a fast iteration cycle, you can create a quality product that can compete with established players in the market. Make product quality a culture in the company, not just a process, by including everyone and giving ownership of different stages to different members of the team.

Additionally, by measuring and monitoring app quality and keeping technical debt to a minimum, you can ensure that your app remains of high quality as you continue to iterate and improve your product.

I hope this helps you speed up your startup-building journey while also teaching you new techniques to ensure quality.